lightrush

1w ago

•

100%

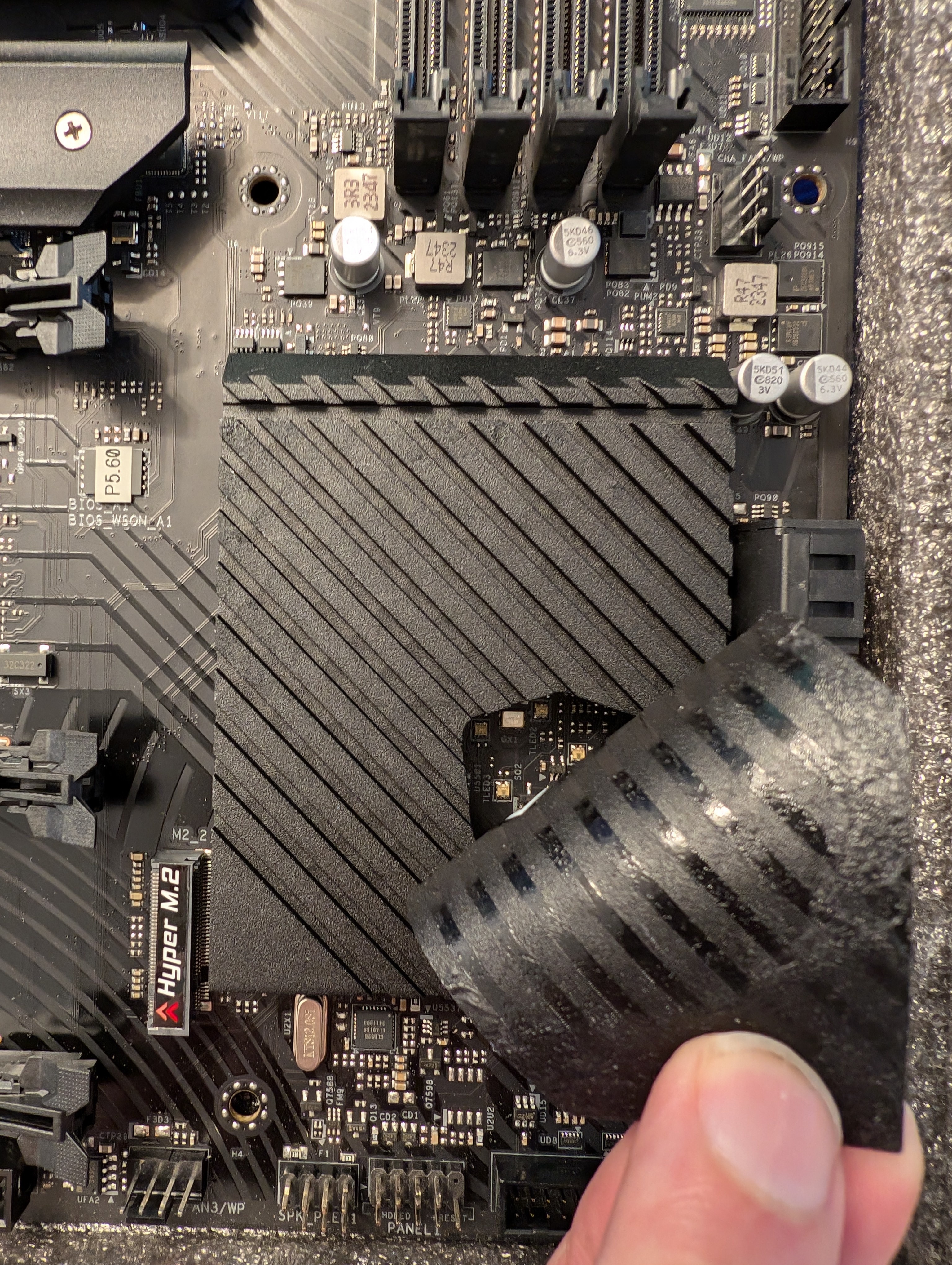

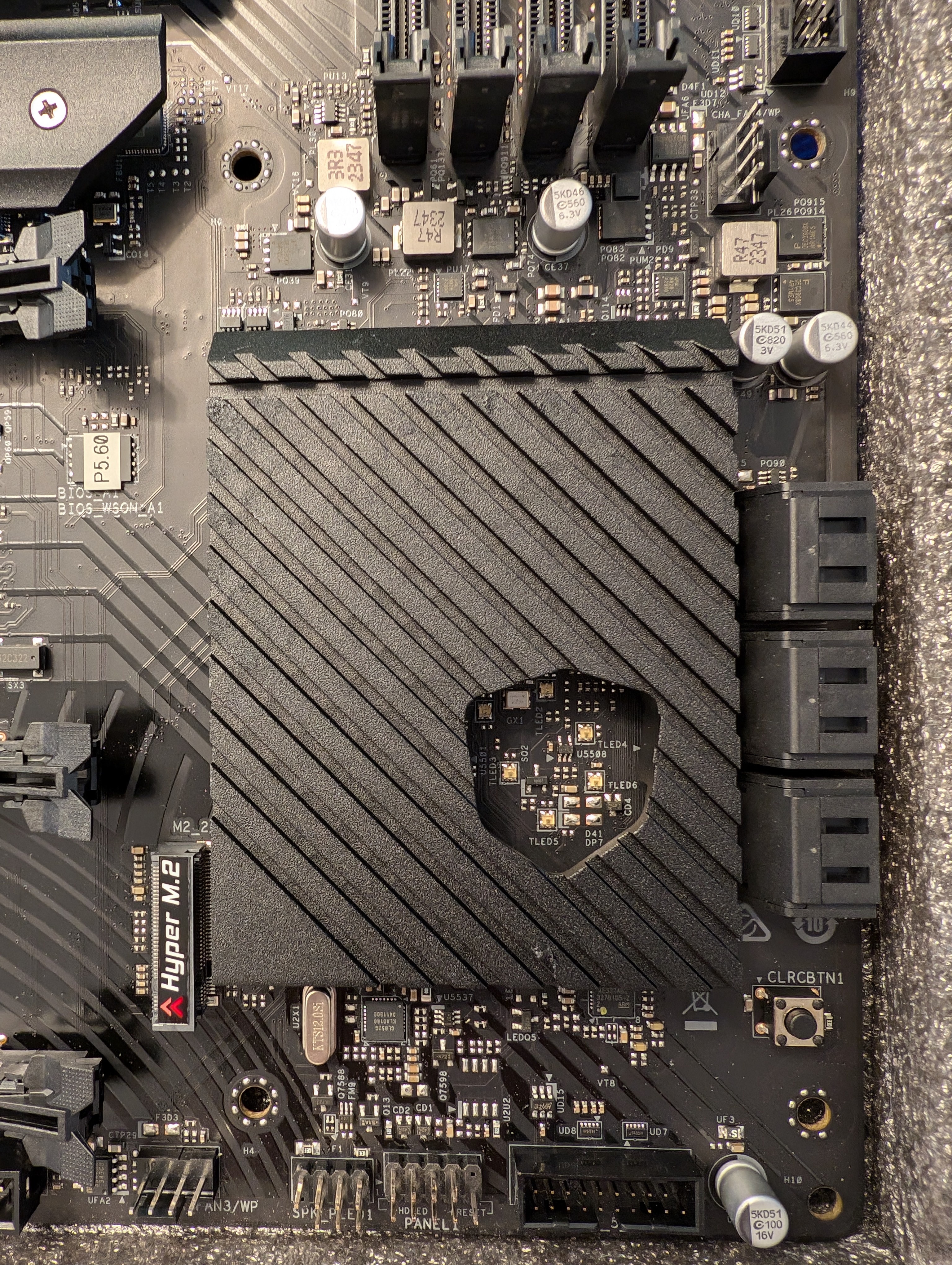

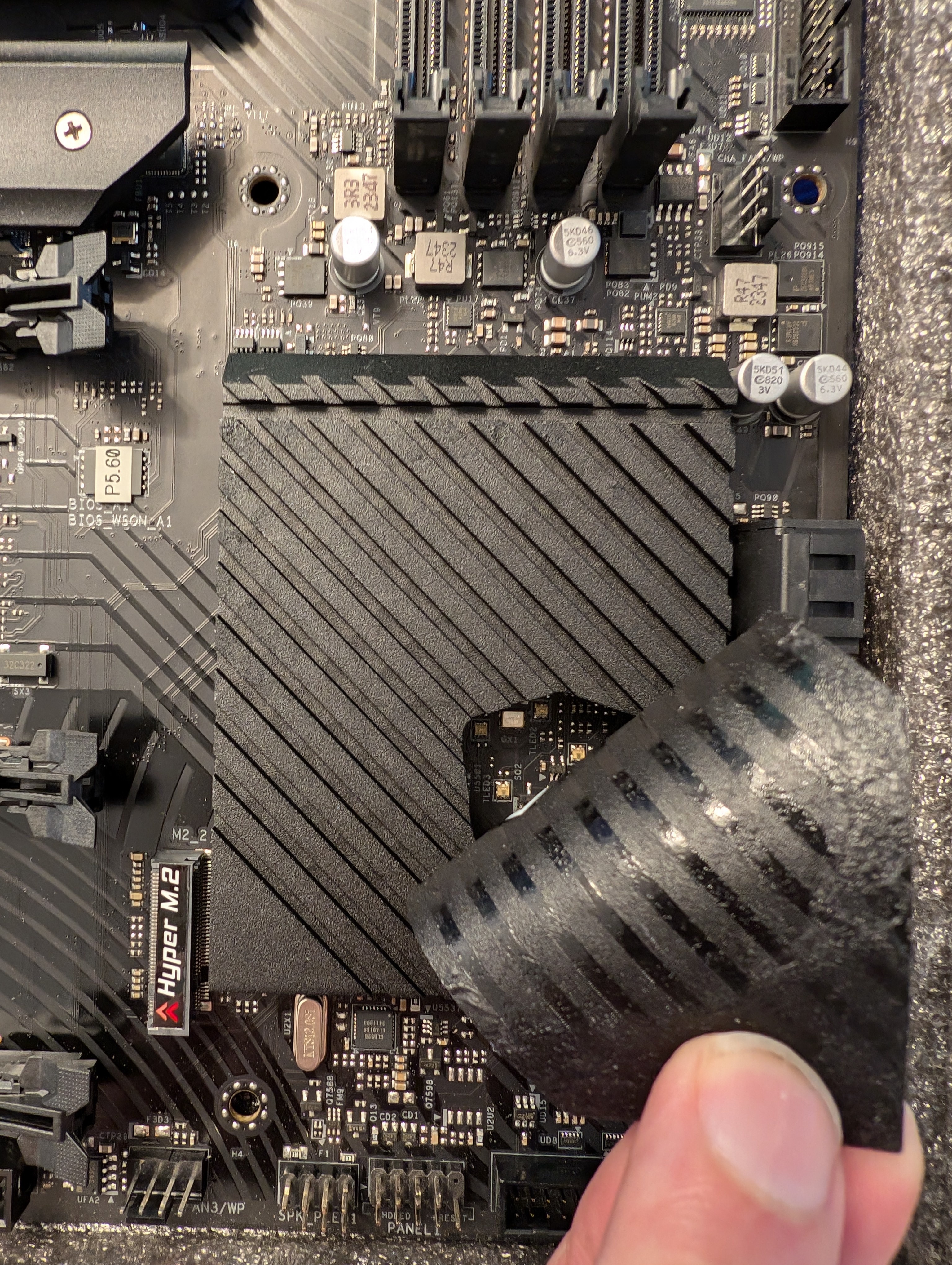

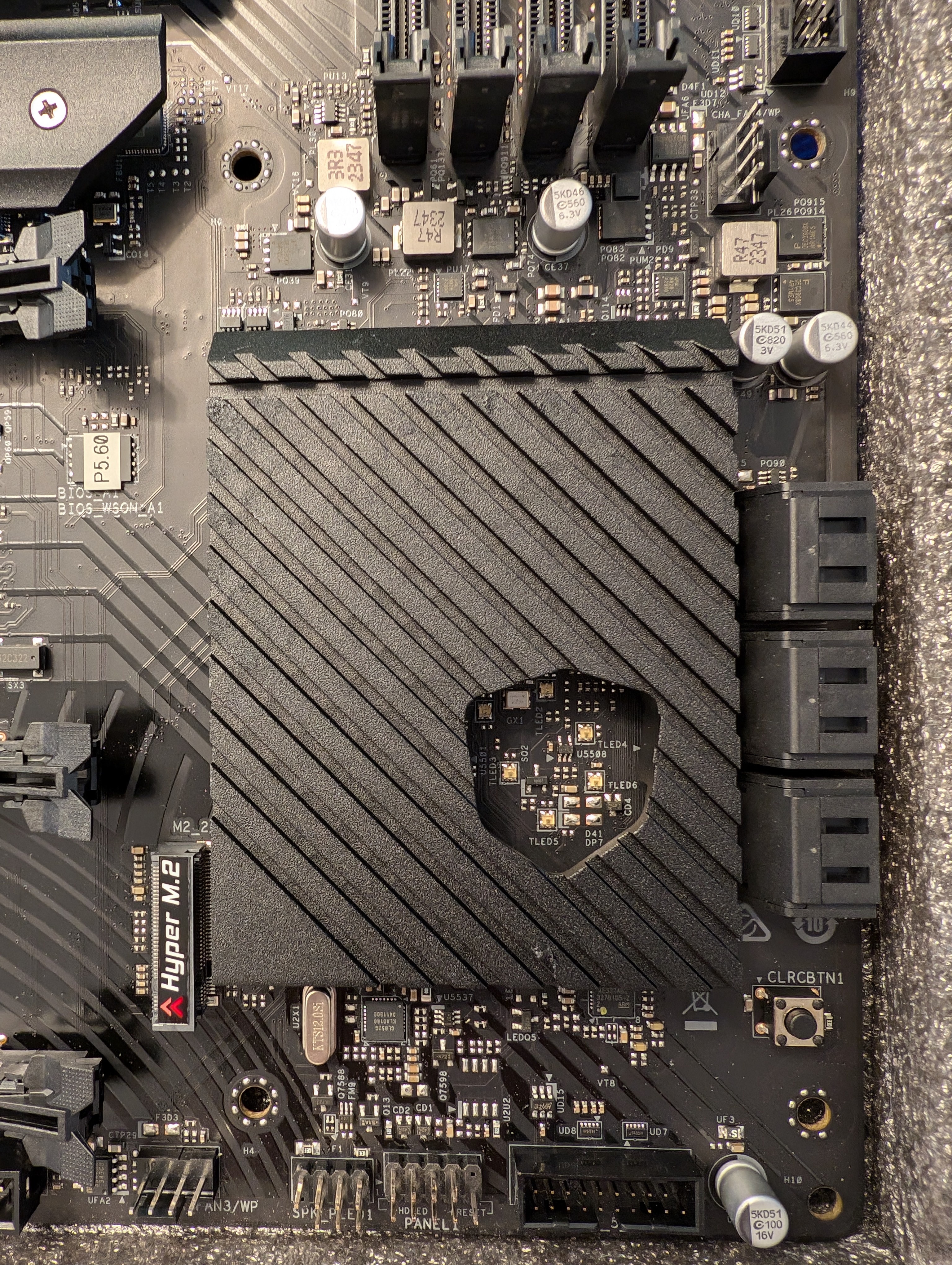

It's terrible. With that said, this ASRock isn't that offensive. Other than this thick plastic sheet, the rest looked fine. Very little offensive RGB.

lightrush

1w ago

•

66%

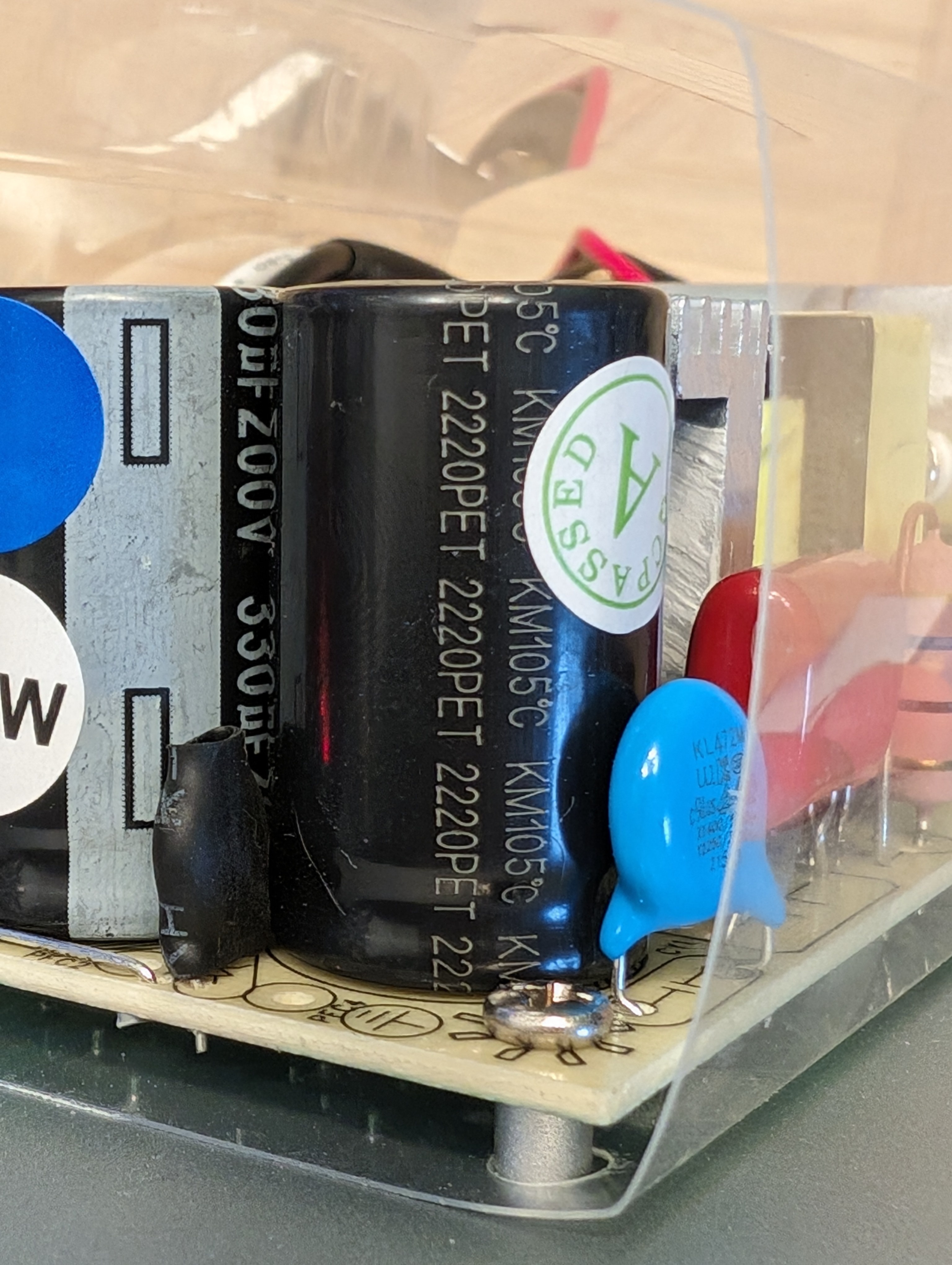

While true for the component itself, there's a material difference for any caps surrounding it. Sure, the chipset would work fine at 40, 50, 70°C. However, an electrolytic capacitor's lifespan is roughly halved with every 10°C temperature increase. From a brief search it seems solid caps also crap out much faster at higher temps but can outlast electrolytics at lower temps. This is a consideration for a long-lifespan system. The one in my case is expected to operate till 2032 or beyond.

I don't think other components degrade in any significant fashion whether they run at 40 or 60°C.
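To put the 10°C rule of thumb into numbers, here's a minimal sketch. It assumes the common approximation that lifetime doubles for every 10°C below the rated temperature. The 2,000-hour / 105°C rating is a hypothetical datasheet value picked for illustration, not something read off this board.

```python
def estimated_cap_life_hours(rated_hours, rated_temp_c, operating_temp_c):
    """Rule-of-thumb estimate: life doubles for every 10°C below the rated temperature."""
    return rated_hours * 2 ** ((rated_temp_c - operating_temp_c) / 10)


HOURS_PER_YEAR = 24 * 365

# Hypothetical capacitor rated for 2,000 hours at 105°C (assumed values).
for temp_c in (40, 50, 60, 70):
    hours = estimated_cap_life_hours(2000, 105, temp_c)
    print(f"{temp_c}°C: ~{hours / HOURS_PER_YEAR:.1f} years of 24/7 operation")
```

Under this approximation the gap between 40°C and 60°C is a factor of four in expected life, which is why the temperature around the caps matters for a system meant to run for many years.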

lightrush

1w ago

•

88%

Unfortunately I didn't take before/after measurements but this thick plastic sheet cannot be good for the chipset thermals. 🥲

cross-posted from: https://lemmy.ca/post/30850573

lightrush

2w ago

•

100%

Huh, interesting. I had no idea. Well I don't need it to be anywhere faster than 100M. USB2 can definitely do that. Closer to 200M. With that said, the latency is more important if you're gonna do anything like Steam Link.

lightrush

2w ago

•

100%

Ads? I thought the Shield didn't have any..

lightrush

2w ago

•

100%

It's a Chromecast with Google TV (CCwGTV) which itself is a dongle. It's connected to a Plugable USB-C PD hub - another dongle. The white bit sticking out to the left of the hub is a TP-Link UE300 Ethernet adapter - yet another dongle. Finally there's an SD card inserted in the Plugable hub as well as an HDMI coupler hooked to the other end of the CCwGTV which allows connecting an HDMI cable to it.

lightrush

2w ago

•

100%

I've been thinking about Shield TV but I hear it's not great with updates.

cross-posted from: https://lemmy.ca/post/30593626

> #donglelife Apparently this isn't allowed in !Android and should go here instead. A Chromecast with Google TV connected to a USB-C hub, connected to an Ethernet dongle, connected to an HDMI coupler.

lightrush

1mo ago

•

100%

It is. I just wish it wasn't this expensive. Will have to live with it for a while. 😅

lightrush

1mo ago

•

100%

So generally Pegatron. :D I used to buy GB because it was made in Taiwan when ASUS became Pegatron and went to China. Their quality decreased. GB used to put high quality components on their boards in comparison. But now GB is also made somewhere in the PRC. I've no idea where MSI are in terms of quality. We used to make fun of them using the worst capacitors back in the 90s/00s. Looking at their Newegg reviews, their 1-star ratings seem to be a lower proportion compared to Pegatron brands and GB. Maybe they're nicer these days? The X570 replacement I got for this machine is an ASUS - "TUF" 🙄

lightrush

1mo ago

•

100%

They feel a bit like a mix between DSA and laptop keycaps.

lightrush

1mo ago

•

100%

What do you buy?

lightrush

1mo ago

•

100%

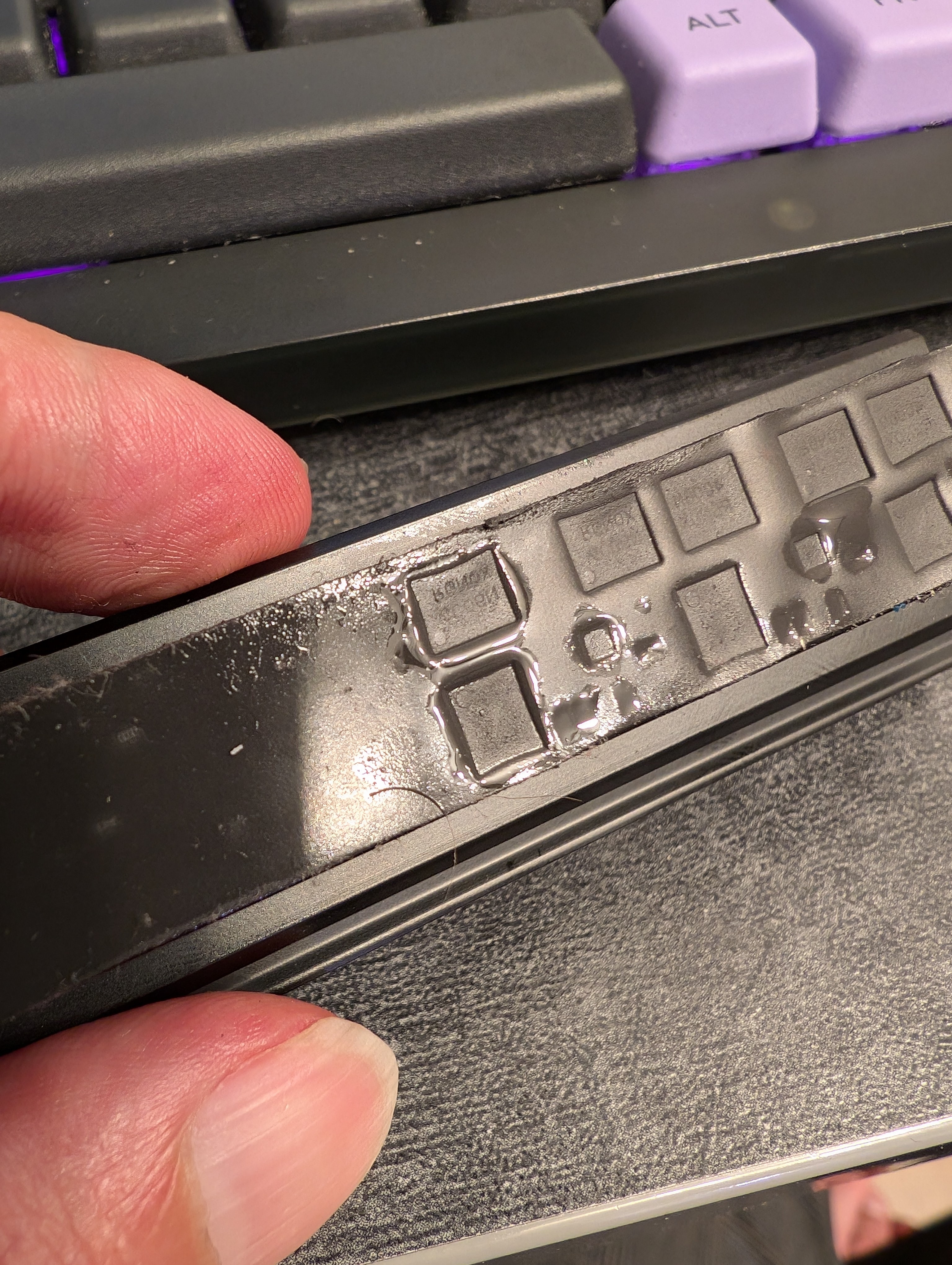

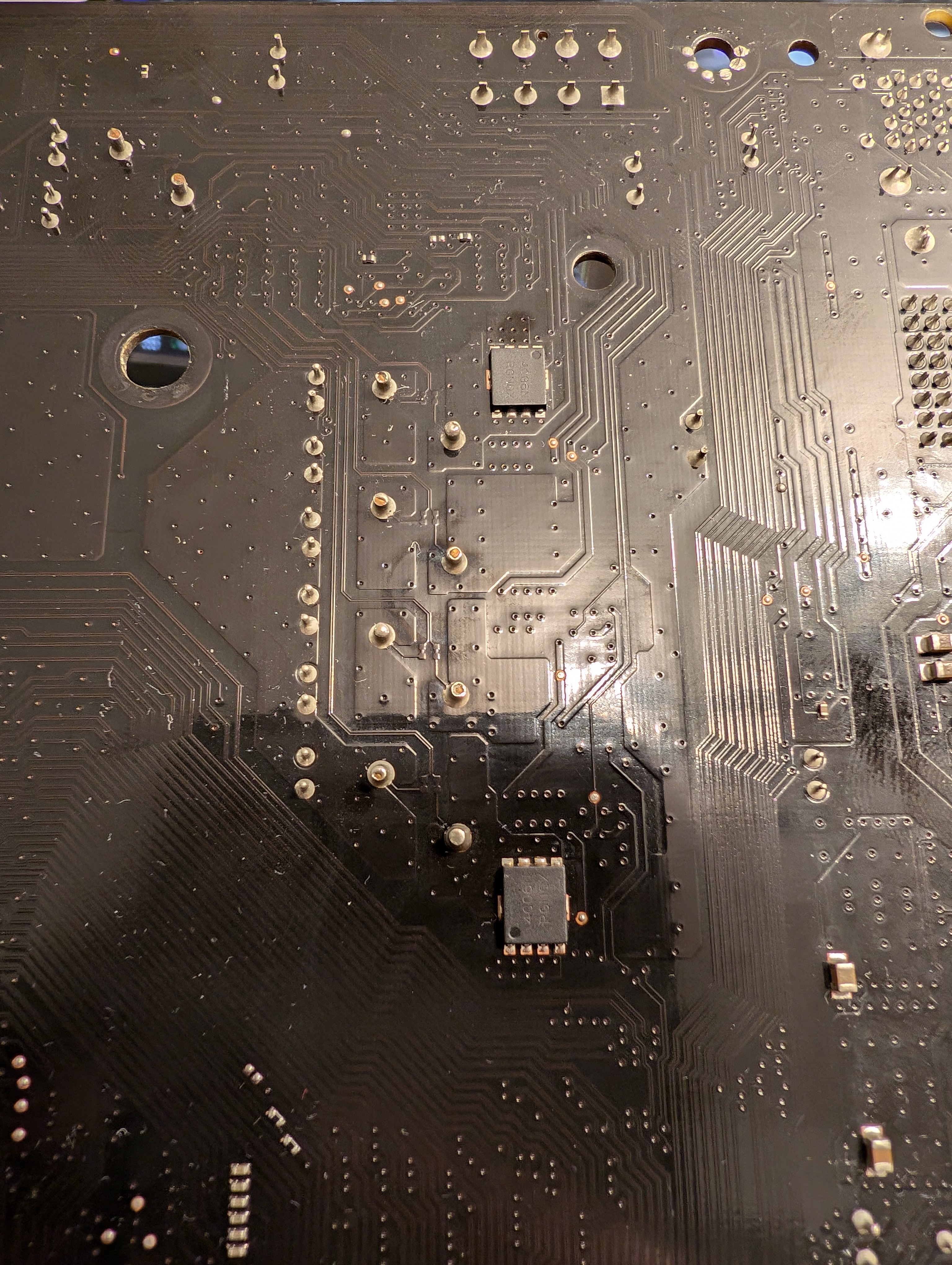

I think I found the source of the liquid @abcdqfr@lemmy.world. The thermal pad under the VRM heatsink has begun to liquefy into an oily substance. This substance appears to have reached the underside of the board through the vias around the VRM and discolored along the way.

Some rubbing with isopropyl alcohol and it's almost gone:

Perhaps there's still life left in this board if used with an older chip.

lightrush

1mo ago

•

100%

I think the board has reached the end of the road. 😅

lightrush

1mo ago

•

95%

Hard to say. She's been in 24/7 service since 2017. Never had stability issues and I've tested it with Prime95 plenty of times upon upgrades. Last week I ran a Llama model and the computer froze hard. Even holding the power button wouldn't turn it off. Did the PSU power flip, came back up. Prime95 stable. Llama -> rip. Perhaps it's been cooked for a while and it's only tripped by this workload. She's an old board, a Gigabyte with B350 running a 5950X (for a couple of years), so it's not super surprising that the power section has been a bit overused. 😅 Replacing with an X570 as we speak.

lightrush

1mo ago

•

100%

Funny enough, I can't detect the smell from hell. Could be COVID.

lightrush

1mo ago

•

100%

Iiinteresting. I'm on the larger AB350-Gaming 3 and it's got REV: 1.0 printed on it. No problems with the 5950X so far. 🤐 Either sheer luck or there could have been updated units before they officially changed the rev marking.

lightrush

1mo ago

•

100%

I am slightly offended by other people believing in God but I generally keep it to myself. 😂

lightrush

1mo ago

•

100%

On paper it should support it. I'm assuming it's the ASRock AB350M. With a certain BIOS version of course. What's wrong with it?

lightrush

1mo ago

•

100%

> B350 isn’t a very fast chipset to begin with

For sure.

> I’m willing to bet the CPU in such a motherboard isn’t exactly current-gen either.

Reasonable bet, but it's a Ryzen 9 5950X with 64GB of RAM. I'm pretty proud of how far I've managed to stretch this board. 😆 At this point I'm waiting for blown caps, but the case temp is pretty low so it may end up trucking along for a surprisingly long time.

> Are you sure you’re even running at PCIe 3.0 speeds too?

So given the CPU, it should be PCIe 3.0, but that doesn't remove any of the queues/scheduling suspicions for the chipset.

I'm now replicating data out of this pool and the read load looks perfectly balanced. Bandwidth's fine too. I think I have no choice but to benchmark the disks individually outside of ZFS once I'm done with this operation in order to figure out whether any show problems. If not, they'll go in the spares bin.

discourse.practicalzfs.com

I'm syncoiding from my normal RAIDz2 to a backup mirror made of 2 disks. I looked at `zpool iostat` and I noticed that one of the disks consistently shows less than half the write IOPS of the other:

```
                                        capacity     operations     bandwidth
pool                                  alloc   free   read  write   read  write
------------------------------------  -----  -----  -----  -----  -----  -----
storage-volume-backup                 5.03T  11.3T      0    867      0   330M
  mirror-0                            5.03T  11.3T      0    867      0   330M
    wwn-0x5000c500e8736faf                -      -      0    212      0   164M
    wwn-0x5000c500e8737337                -      -      0    654      0   165M
```

This is also evident in `iostat`:

```
 f/s  f_await  aqu-sz   %util  Device
0.00     0.00    3.48   46.2%  sda
0.00     0.00    8.10   99.7%  sdb
```

The difference is also evident in the temperatures of the disks. The busier disk is 4 degrees warmer than the other. The disks are identical on paper and bought at the same time. Is this behaviour expected?
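One way to read the `zpool iostat` numbers above is to divide bandwidth by operations to get an average write size per disk. This is only a sketch over the posted sample, not a diagnosis. The helper names are made up for illustration, the `M` suffix is treated as MiB (an assumption), and it only handles plain integer operation counts like the ones shown.

```python
# Leaf-disk rows copied from the zpool iostat sample in the post.
SAMPLE = """\
wwn-0x5000c500e8736faf  -  -  0  212  0  164M
wwn-0x5000c500e8737337  -  -  0  654  0  165M
"""

# Assumption: K/M/G suffixes are binary multiples; values are converted to MiB.
UNIT_TO_MIB = {"K": 1 / 1024, "M": 1, "G": 1024}


def to_mib(value):
    """Convert a scaled value such as '164M' to MiB."""
    return float(value[:-1]) * UNIT_TO_MIB[value[-1]]


def write_stats(iostat_text, disk_prefix="wwn-"):
    """Return {disk: (write_ops, write_mib_per_s)} for leaf-disk rows."""
    stats = {}
    for line in iostat_text.splitlines():
        fields = line.split()
        if len(fields) == 7 and fields[0].startswith(disk_prefix):
            stats[fields[0]] = (int(fields[4]), to_mib(fields[6]))
    return stats


for disk, (ops, mib) in write_stats(SAMPLE).items():
    print(f"{disk}: {ops} write ops/s, {mib:.0f} MiB/s, ~{mib / ops:.2f} MiB per op")
```

On the posted sample both disks take nearly the same bandwidth, so the disk with fewer operations is simply being handed larger writes on average (roughly 0.77 MiB vs 0.25 MiB per operation). That narrows the question to why the requests are merged or split differently on the two disks.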

UofT is closing the `[first.last]@mail.utoronto.ca` email accounts **for alumni.** You can get a new email account that will end in `@alumni.utoronto.ca` but this won't happen automatically. You **have to request** one. Check your current UTmail+ inbox for an email titled "Notice of Upcoming Email Account Closure"

I built a 5x 16TB RAIDz2, filled it with data, then I discovered the following.

Sequentially reading a single file from the file system gave me around 40MB/s. Reading multiple files in parallel brought the total throughput into the hundreds of megabytes per second - where I'd expect it. This is really weird. The 5 disks show 100% utilization during single file reads. Writes are supremely fast, whether single threaded or parallel. Reading directly from each disk gives >200MB/s.

Splitting the RAIDz2 into two RAIDz1s, or into one RAIDz1 and a mirror, improved reads to 100 and something MB/s. Better but still not where it should be.

I have an existing RAIDz1 made of 4x 8TB disks on the same machine. That one reads at 250-350MB/s. I made an equivalent 4x 16TB RAIDz1 from the new drives and that read at about 100MB/s. Much slower.

All of this was done with `ashift=12` and default `recordsize`. The disks' datasheets say their block size is 4096.

I decided to try RAIDz2 with `ashift=13` even though the disks really say they've got 4K physical block size. Lo and behold, the single file reads went to over 150MB/s. 🤔

Following from there, I got full throughput when I increased the `recordsize` to 1M. This produces full throughput even with `ashift=12`.

My existing 4x 8TB RAIDz1 pools with `ashift=12` and `recordsize=128K` read single files *fast.*

Here's a diff of the queue dump of the old and new drives. The left side is a WD 8TB from the existing RAIDz1, the right side is one of the new HC550 16TB:

```
< max_hw_sectors_kb: 1024
---
> max_hw_sectors_kb: 512
20c20
< max_sectors_kb: 1024
---
> max_sectors_kb: 512
25c25
< nr_requests: 2
---
> nr_requests: 60
36c36
< write_cache: write through
---
> write_cache: write back
38c38
< write_zeroes_max_bytes: 0
---
> write_zeroes_max_bytes: 33550336
```

Could the `max_*_sectors_kb` being half on the new drives have something to do with it?

---

Can anyone make any sense of any of this?
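For anyone wanting to produce a similar comparison, here's a minimal sketch that collects a drive's block-queue settings from sysfs and reports the attributes that differ. It assumes the "queue dump" corresponds to the files under `/sys/block/<device>/queue`. The function names are hypothetical, and the demo dictionaries hold only the values shown in the diff above, so it runs without touching real devices.

```python
from pathlib import Path


def read_queue_settings(device, sys_block="/sys/block"):
    """Return {attribute: value} for readable files in <sys_block>/<device>/queue."""
    settings = {}
    queue_dir = Path(sys_block) / device / "queue"
    for entry in sorted(queue_dir.iterdir()):
        if entry.is_file():
            try:
                settings[entry.name] = entry.read_text().strip()
            except OSError:
                continue  # skip attributes that can't be read
    return settings


def diff_queue_settings(old, new):
    """Return {attribute: (old_value, new_value)} for attributes that differ."""
    keys = sorted(set(old) | set(new))
    return {k: (old.get(k), new.get(k)) for k in keys if old.get(k) != new.get(k)}


# Values taken from the diff in the post (old WD 8TB vs new HC550 16TB).
OLD_DRIVE = {"max_hw_sectors_kb": "1024", "max_sectors_kb": "1024", "nr_requests": "2"}
NEW_DRIVE = {"max_hw_sectors_kb": "512", "max_sectors_kb": "512", "nr_requests": "60"}

for name, (old, new) in diff_queue_settings(OLD_DRIVE, NEW_DRIVE).items():
    print(f"{name}: {old} -> {new}")
```

On a live system the two inputs would come from something like `read_queue_settings("sda")` and `read_queue_settings("sdb")`, where the device names are placeholders.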

I wasn't aware Steve Paikin and John McGrath had a podcast on Ontario politics. I like it!

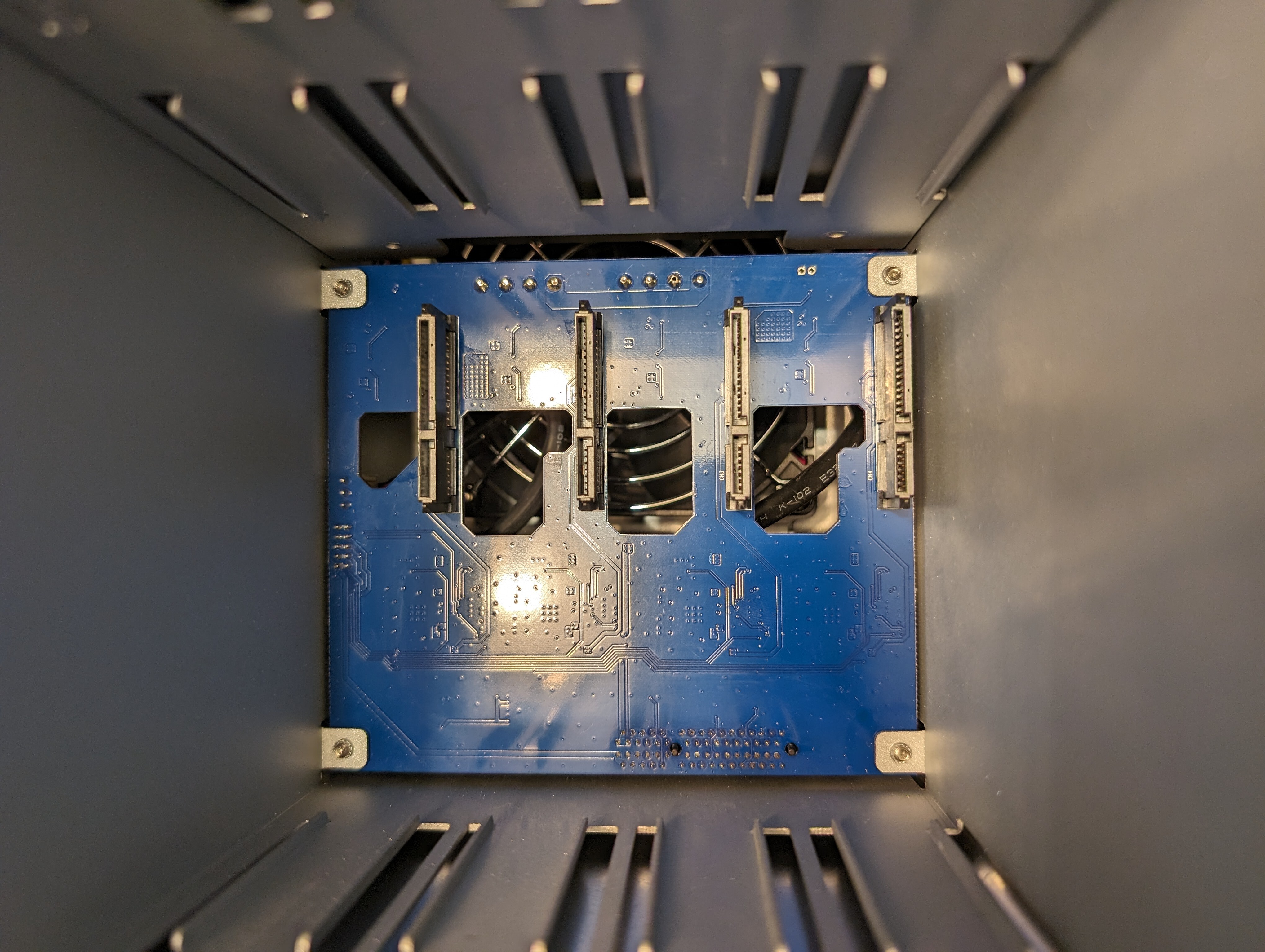

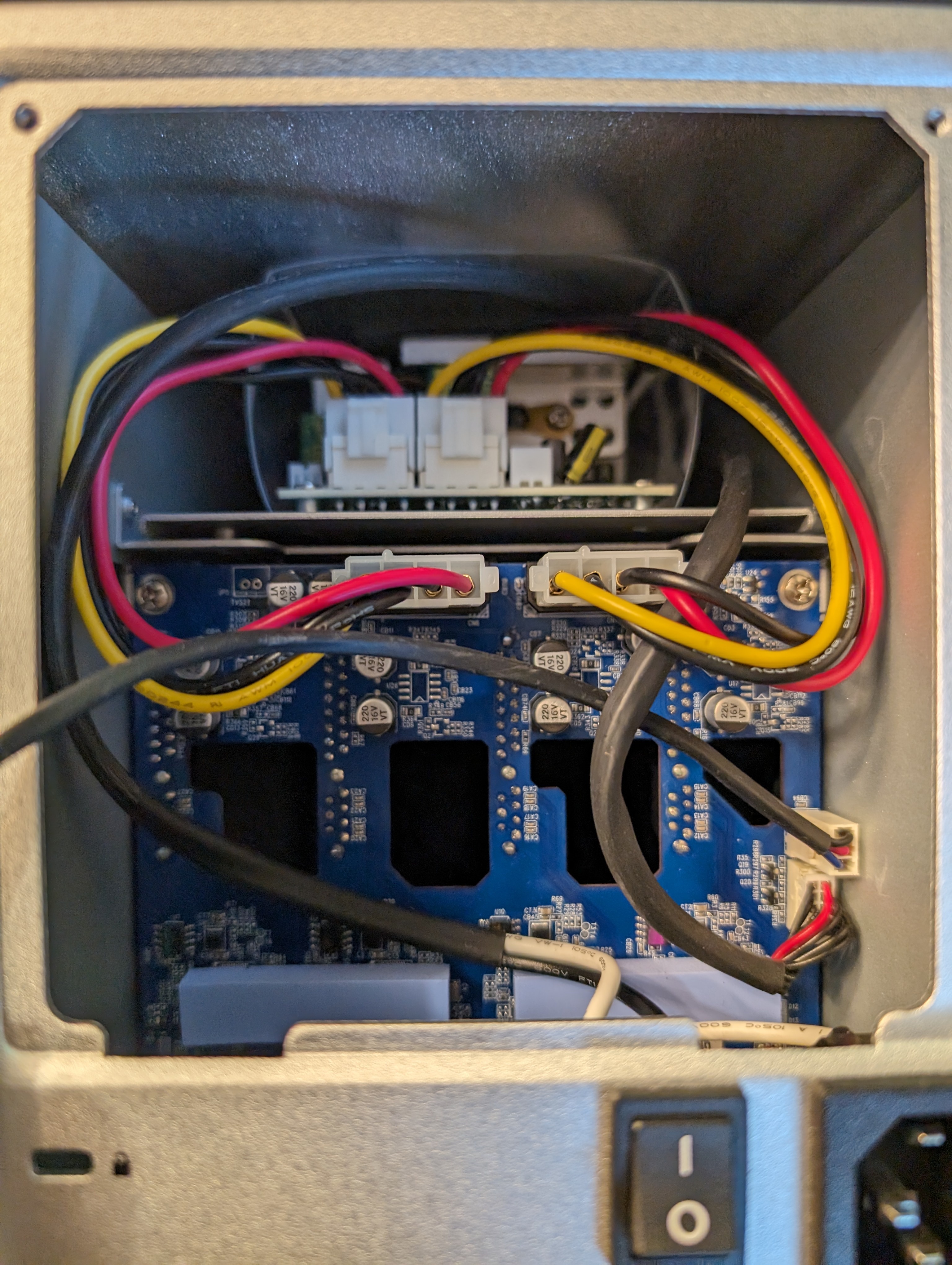

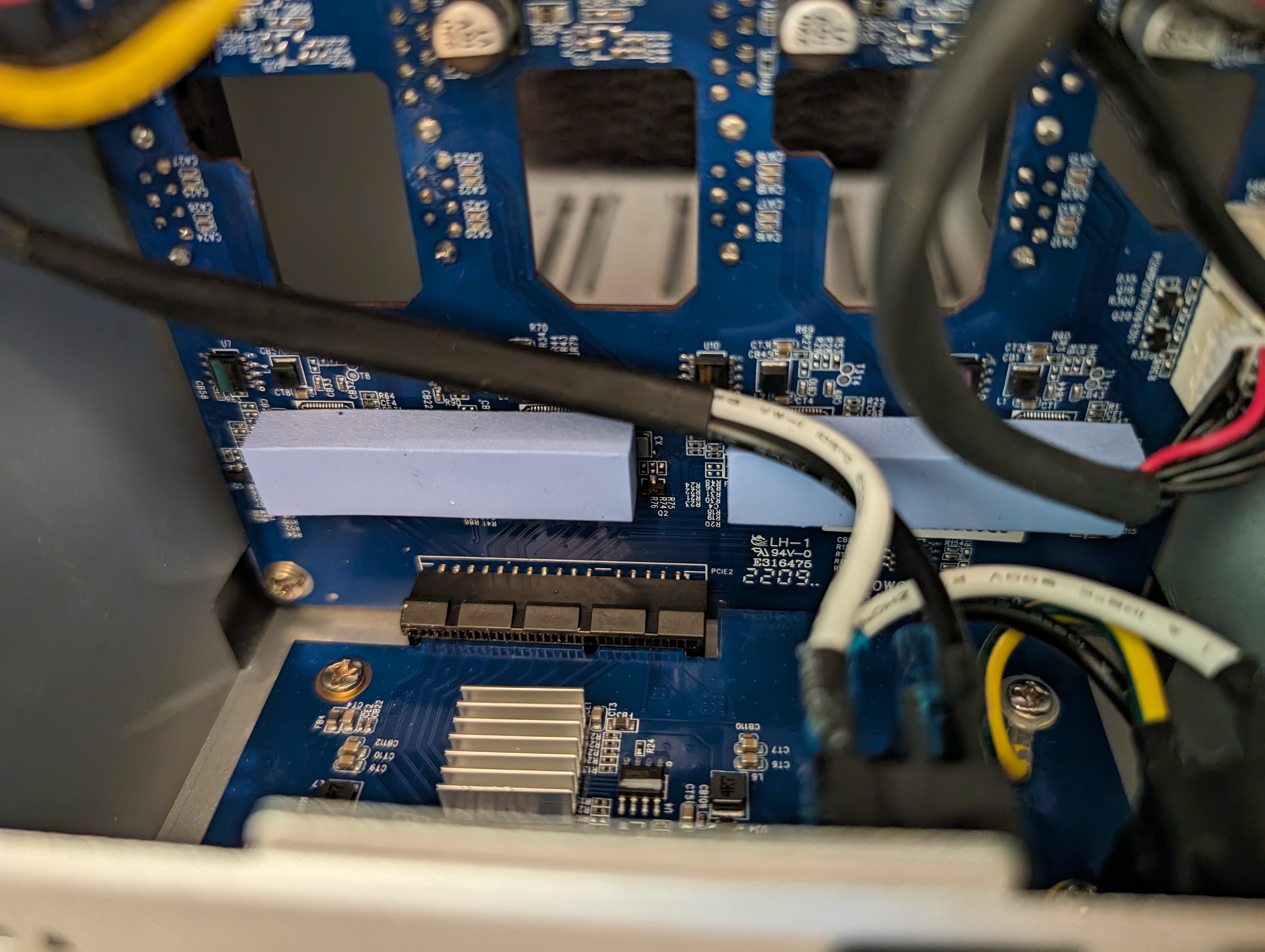

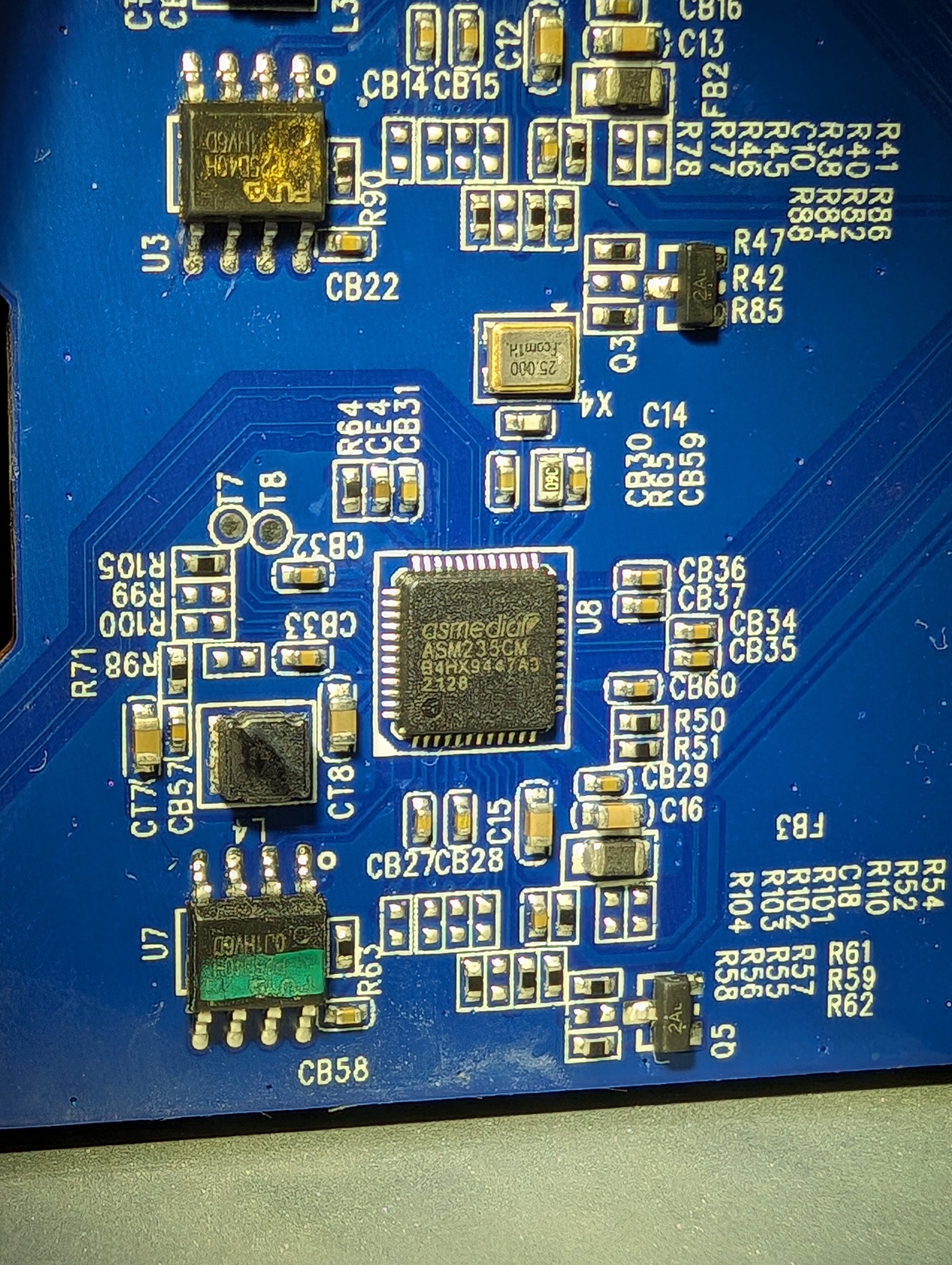

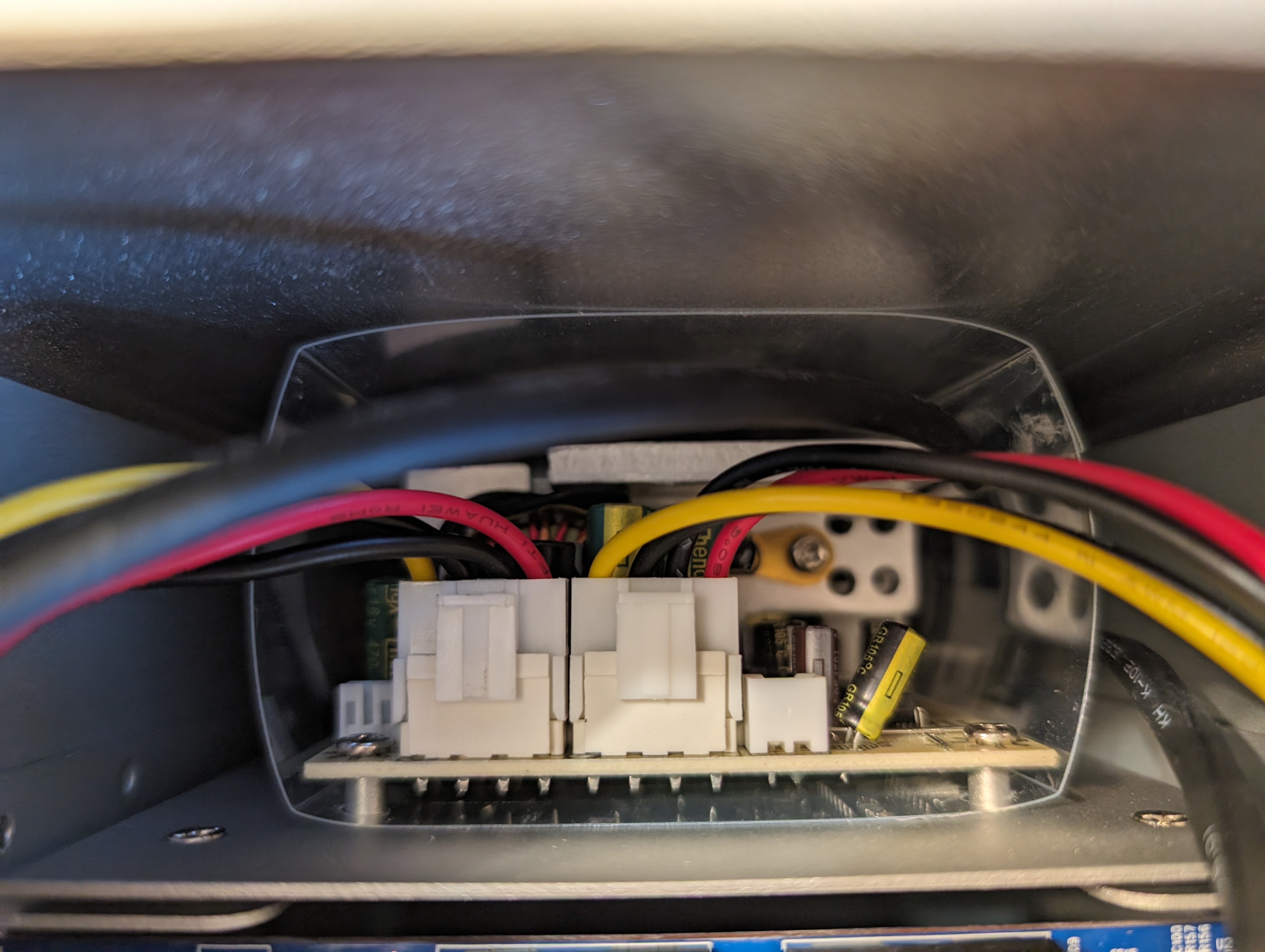

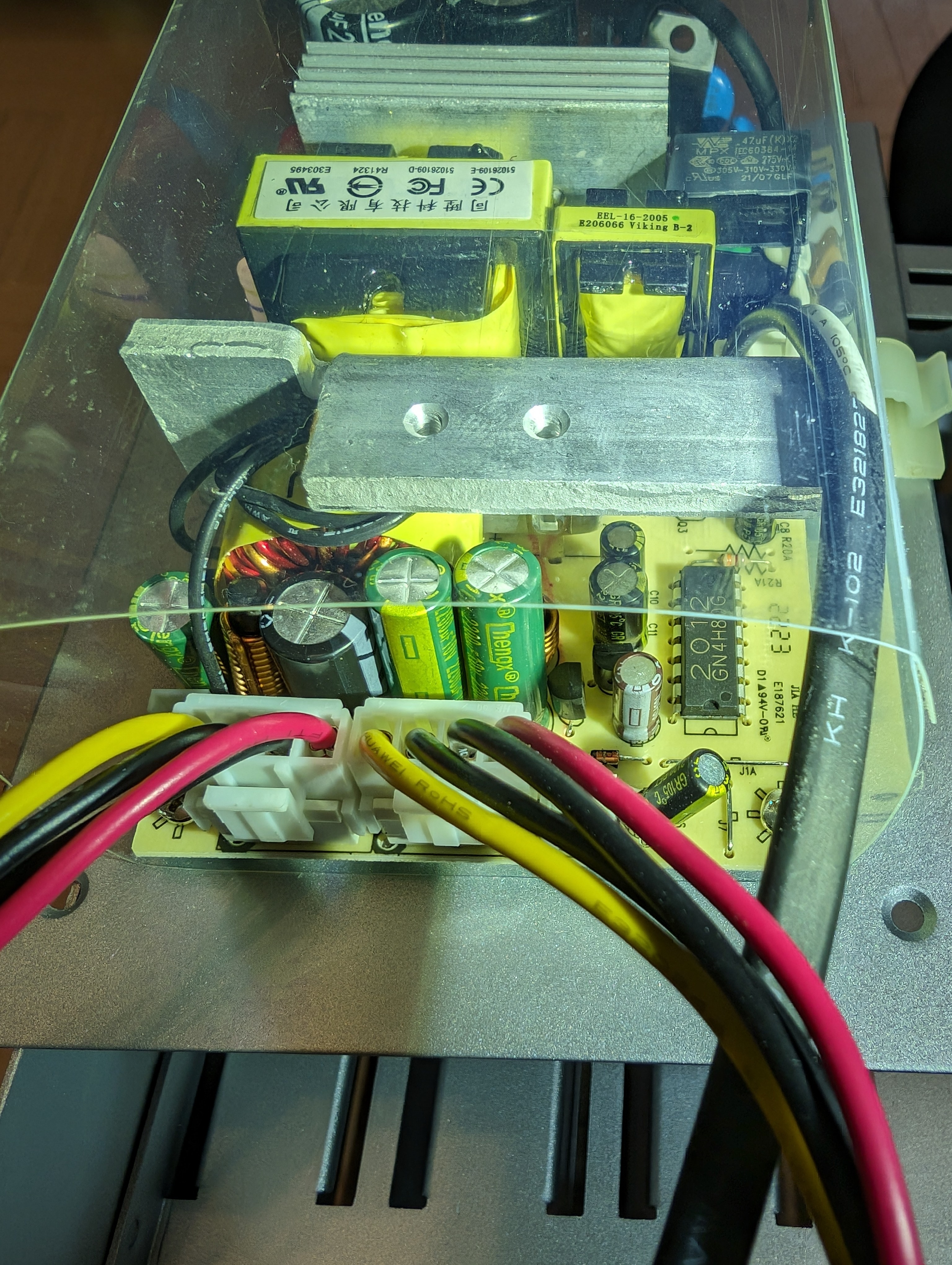

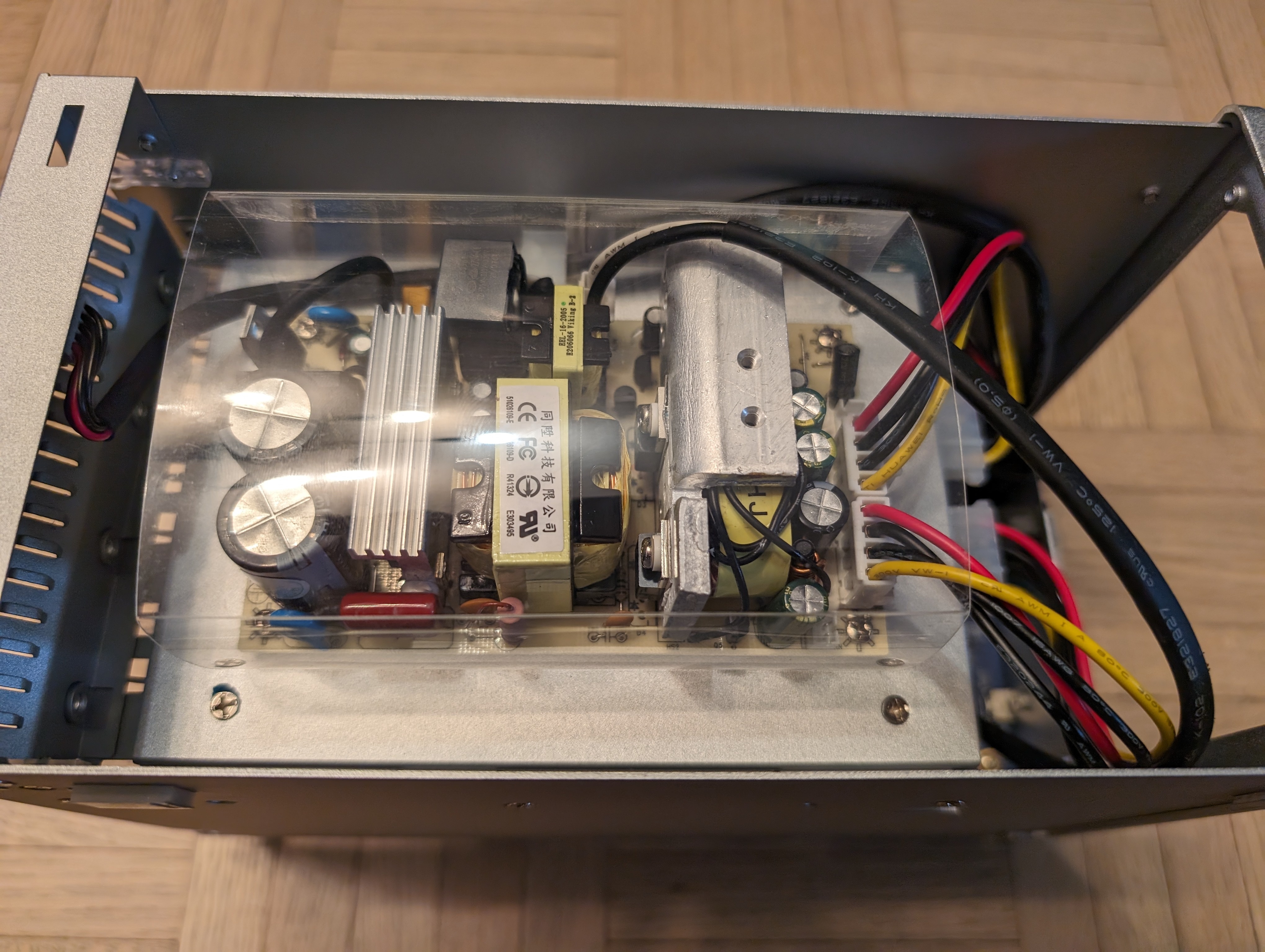

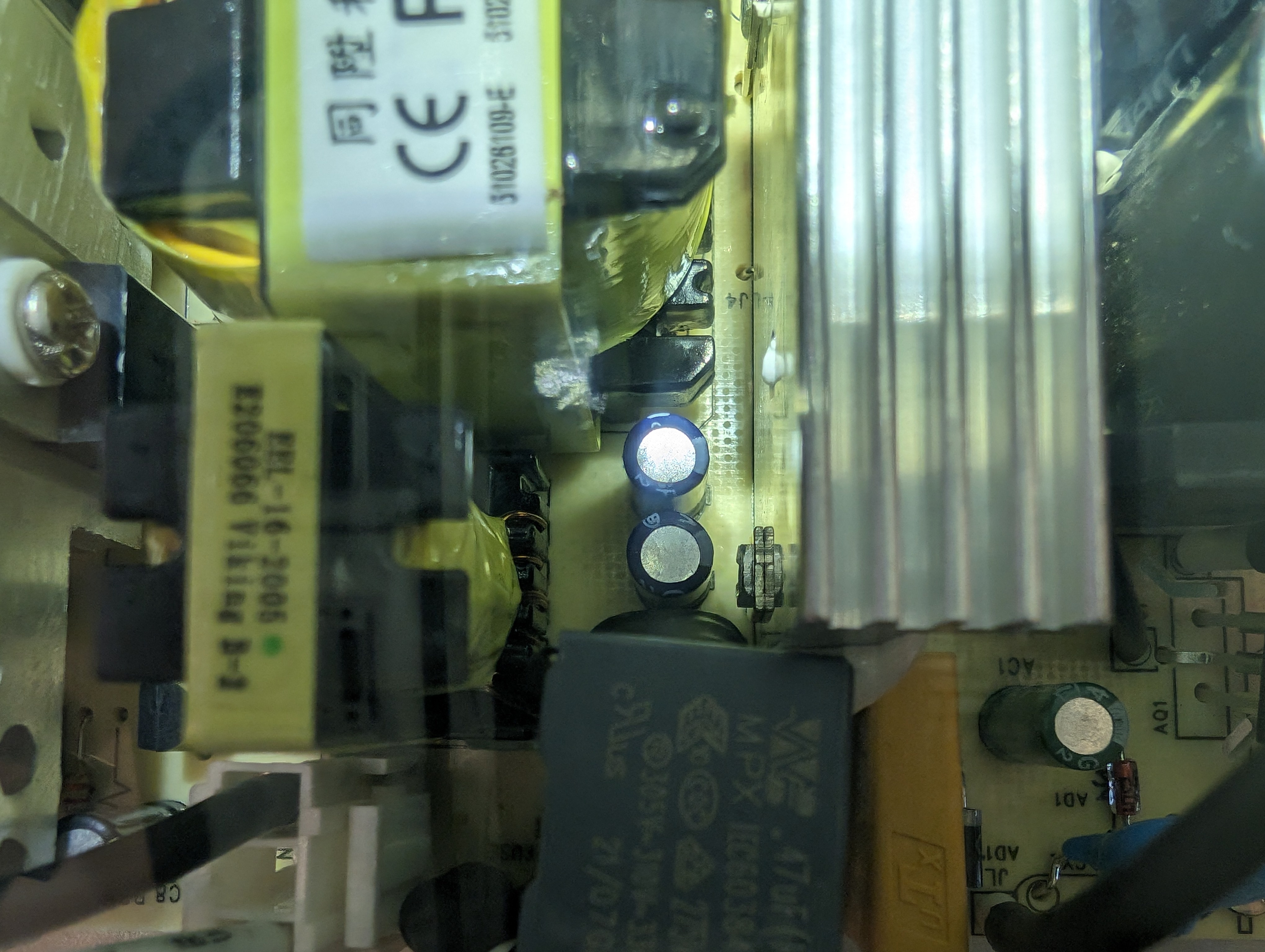

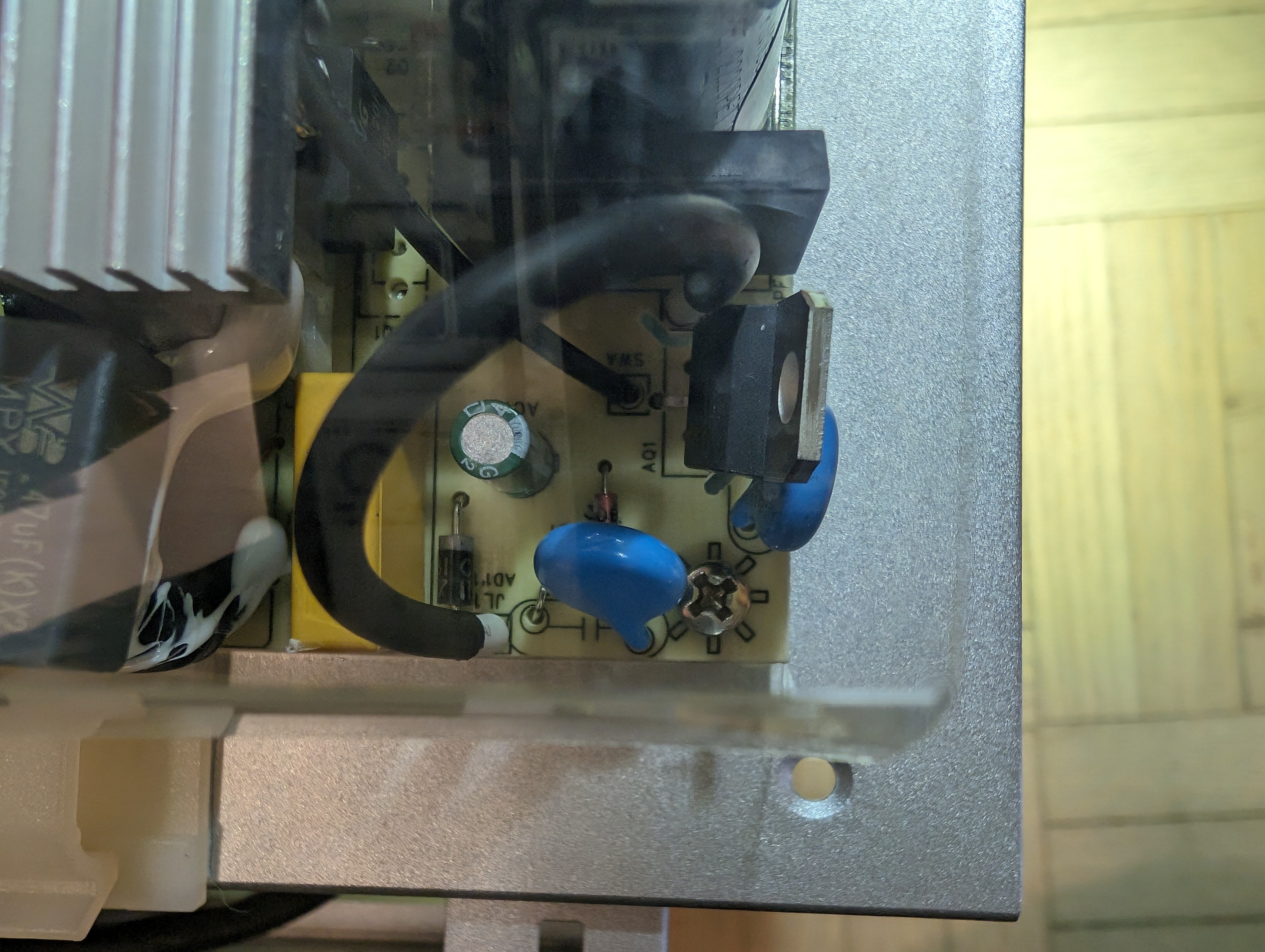

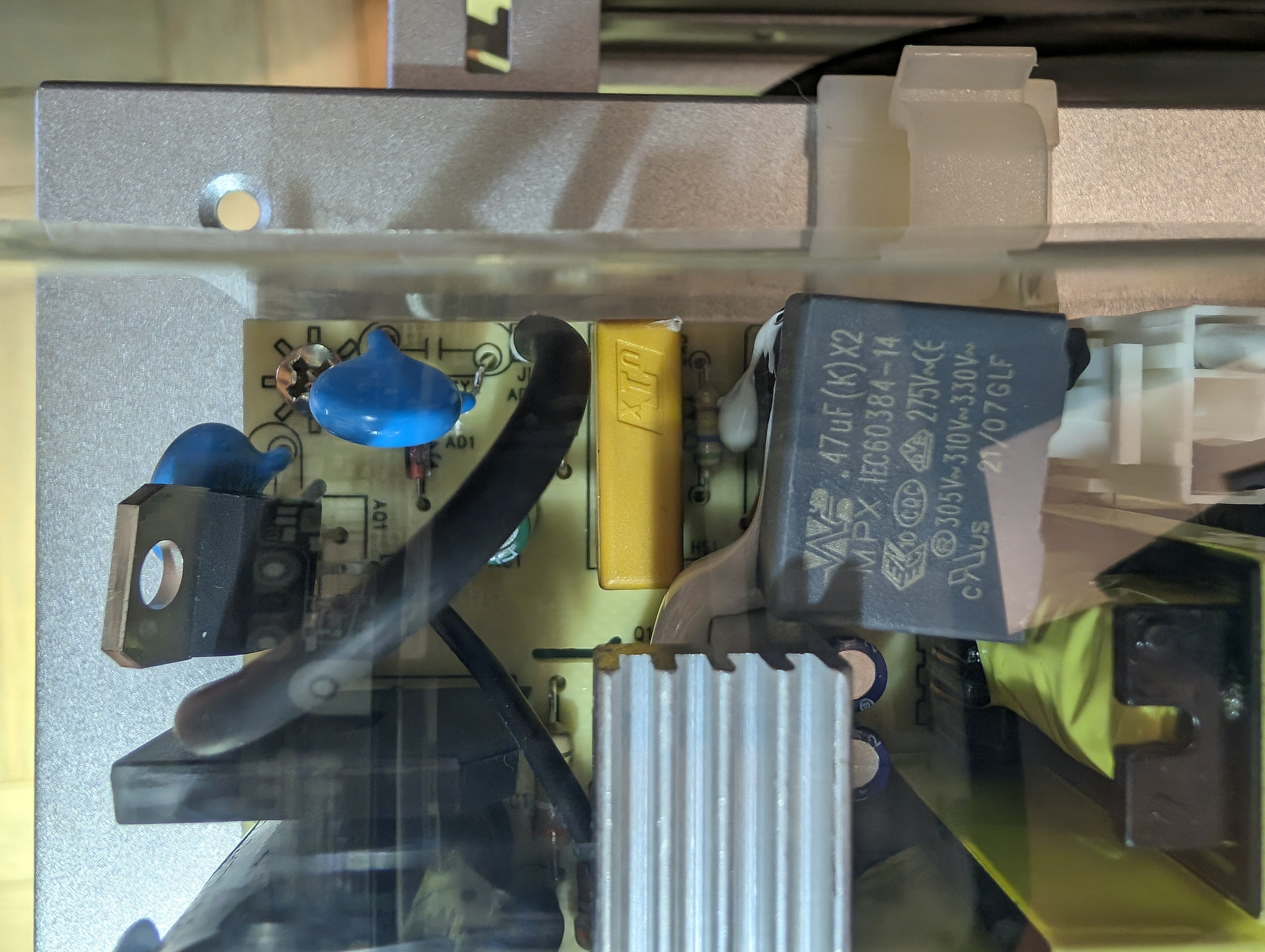

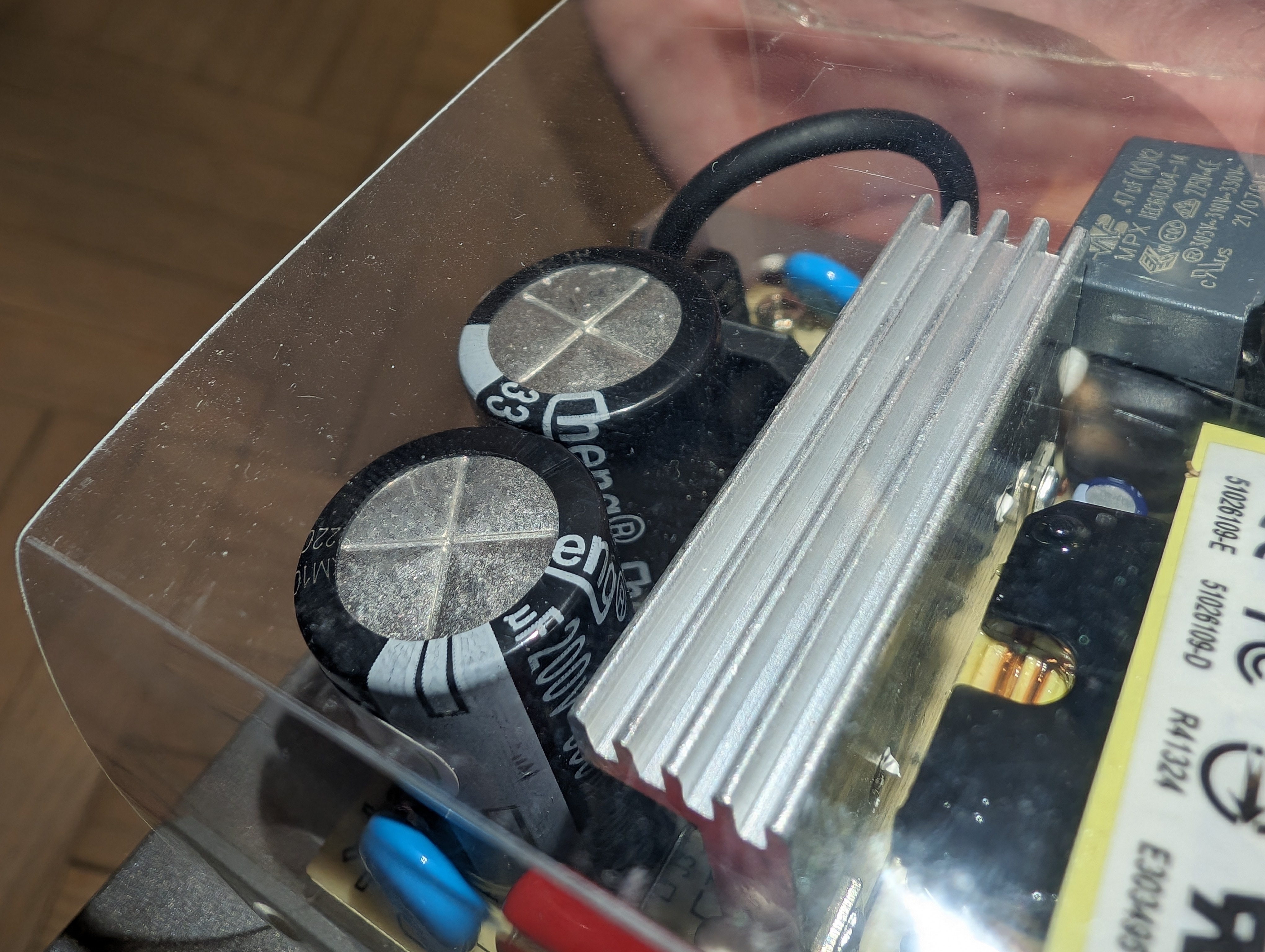

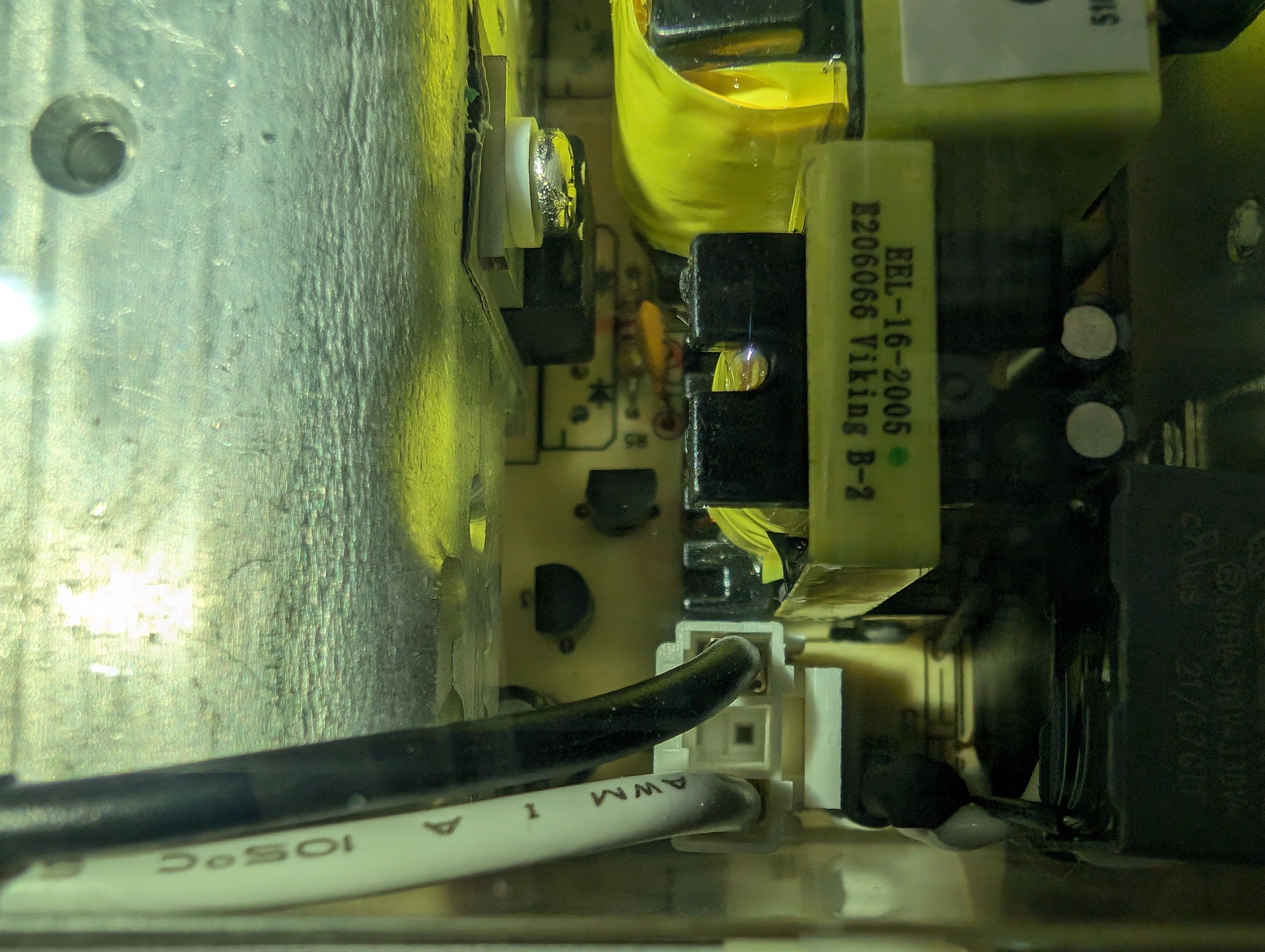

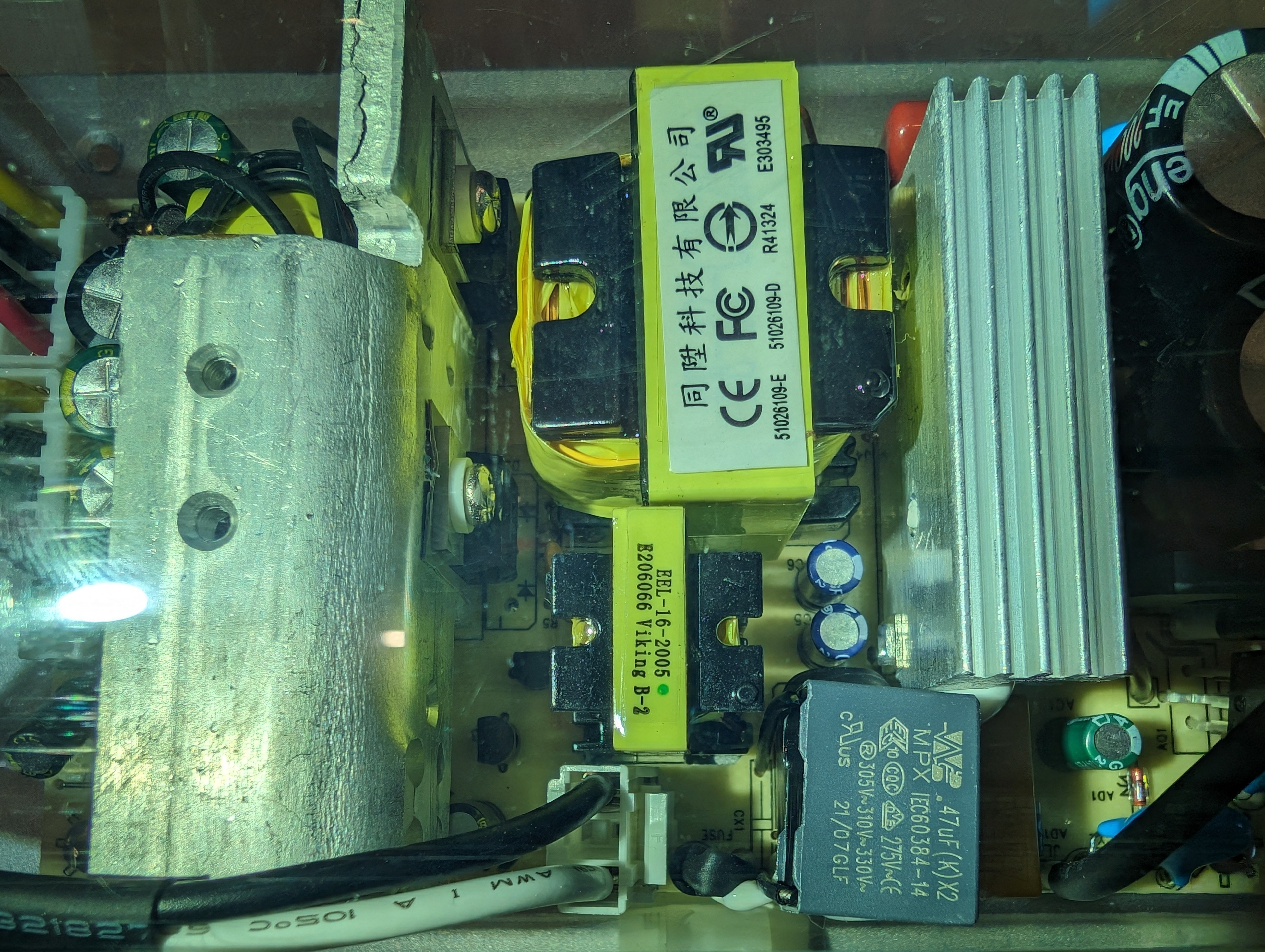

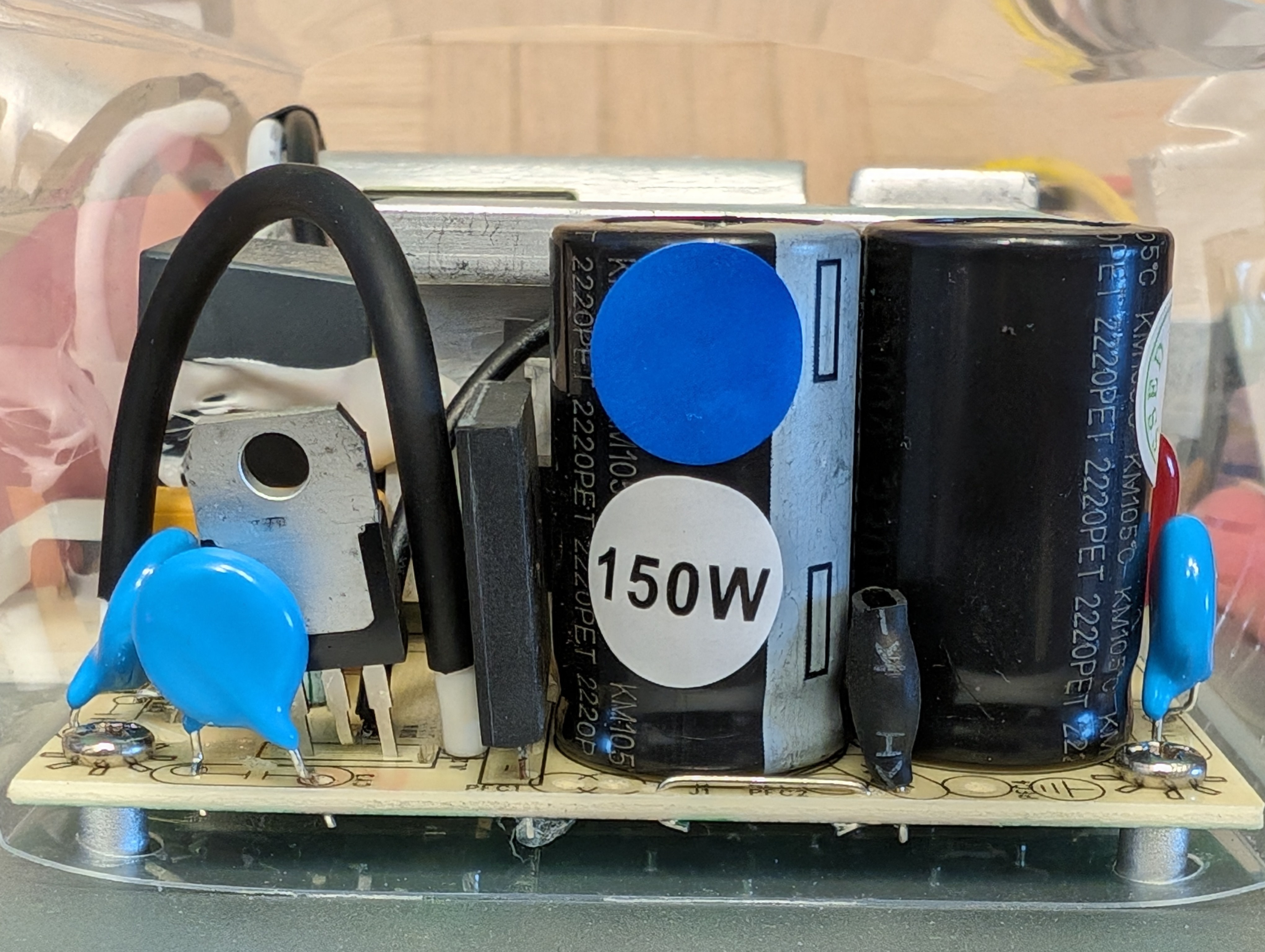

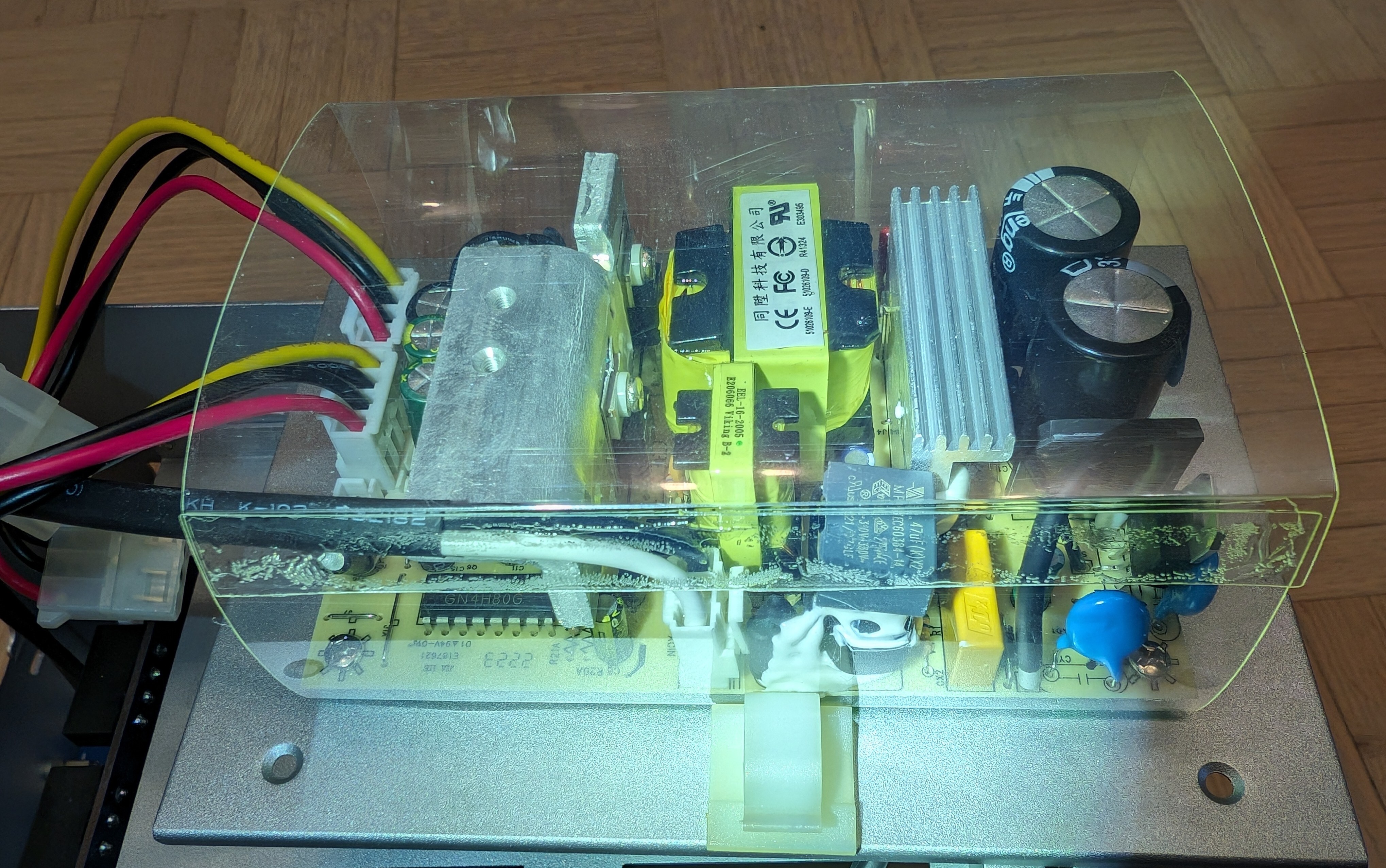

## Why

I'm running a ZFS pool of 4 external USB drives. It's a mix of WD Elements and enclosed IronWolfs. I'm looking to consolidate it into a single box since I'm likely to add another 4 drives to it in the near future and dealing with 8 external drives could become a bit problematic in a few ways.

## ZFS with USB drives

There've been recurrent questions about ZFS with USB. Does it work? How does it work? Is it recommended and so on. The answer is complicated but it revolves around - yes it works and it can work well **so long as you ensure that anything on your USB path is good.** And that's difficult since it's not generally known what USB-SATA bridge chipset an external USB drive has, whether it's got firmware bugs, whether it requires quirks, whether it's stable under sustained load etc. Then that difficulty is multiplied by the number of drives the system has. In my setup for example, I've swapped multiple enclosure models till I stumbled on a rock-solid one. I've also had to install heatsinks on the ASM1351 USB-SATA bridge ICs in the WD Elements drives to stop them from overheating and dropping dead under heavy load.

With this in mind, if a multi-bay unit like the OWC Mercury Elite Pro Quad proves to be as reliable as some anecdotes say, it could become a go-to recommendation for USB DAS that eliminates a lot of those variables, leaving just the host side since it comes with a cable too. And the host side tends to be reliable since it's typically either Intel or AMD. Read the Testing section for some tidbits about AMD.

## Initial observations of the OWC Mercury Elite Pro Quad

- Built like a tank, heavy enclosure, feet screwed-in not glued
- Well designed for airflow. Air enters the front, goes through the disks, PSU, main PCB and exits from the back. Some IronWolf that averaged 55°C in individual enclosures clock at 43°C in here
- It's got a Good Quality DC Fan (check pics). So far it's pretty quiet
- Uses 4x ASM235CM USB-SATA bridge ICs which are found in other well-regarded USB enclosures. It's newer than the ASM1351 which is also reliable when not overheating
- The USB-SATA bridges are wired to a USB 3.1 Gen 2 hub - VLI-822. No SATA port multipliers
- The USB hub is heatsinked
- The ASM235CM ICs have a weird thick thermal pad attached to them but without any metal attached to it. It appears the pads are serving as heatsinks themselves which might be enough for the ICs to stay within working temps
- The main PCB is an all-solid-cap affair
- The PSU shows electrolytic caps which is unsurprising
- The main PCB is connected to the PSU via standard molex connectors like the ones found in ATX PSUs. Therefore if the built-in PSU dies, it could be replaced with an ATX PSU
- It appears to rename the drives to its own "Elite Pro Quad A/B/C/D" naming, however `hdparm -I /dev/sda` seems to return the original drive information. The disks appear with their internal designations in GNOME Disks. The kernel maps them in `/dev/disk/by-id/*` according to those as before. I moved my drives in it, rebooted and ZFS started the pool as if nothing happened
- SMART info is visible in GNOME Disks as well as `smartctl -x /dev/sda`
- It comes with both a USB-C to USB-C cable and a USB-C to USB-A cable
- Made in Taiwan

## Testing

- No errors in the system logs so far
- I'm able to pull 350-370MB/s sequential from my 4-disk RAIDz1
- Loading the 4 disks together with `hdparm` results in about 400MB/s total bandwidth
- It's hooked up via USB 3.1 Gen 1 on a B350 motherboard. I don't see a significant difference in the observed speeds whether it's on the chipset-provided USB host, or the CPU-provided one
- Completed a manual scrub of a 24TB RAIDz1 while also being loaded with an Immich backup, Plex usage, Syncthing rescans and some other services. No errors in the system log. Drives stayed under 44°C. Stability looks promising
- Will pull a drive and add a new one to resilver once the latest changes get to the off-site backup
- Pulled a drive from the pool and replaced it with a spare while the pool was live. SATA hot plugging seems to work. Resilvered 5.25TB in about 32 hours while the pool was in use. Found the following vomit in the logs repeating every few minutes:

  ```
  Apr 01 00:31:08 host kernel: scsi host11: uas_eh_device_reset_handler start
  Apr 01 00:31:08 host kernel: usb 6-3.4: reset SuperSpeed USB device number 12 using xhci_hcd
  Apr 01 00:31:08 host kernel: scsi host11: uas_eh_device_reset_handler success
  Apr 01 00:32:42 host kernel: scsi host11: uas_eh_device_reset_handler start
  Apr 01 00:32:42 host kernel: usb 6-3.4: reset SuperSpeed USB device number 12 using xhci_hcd
  Apr 01 00:32:42 host kernel: scsi host11: uas_eh_device_reset_handler success
  Apr 01 00:33:54 host kernel: scsi host11: uas_eh_device_reset_handler start
  Apr 01 00:33:54 host kernel: usb 6-3.4: reset SuperSpeed USB device number 12 using xhci_hcd
  Apr 01 00:33:54 host kernel: scsi host11: uas_eh_device_reset_handler success
  Apr 01 00:35:07 host kernel: scsi host11: uas_eh_device_reset_handler start
  Apr 01 00:35:07 host kernel: usb 6-3.4: reset SuperSpeed USB device number 12 using xhci_hcd
  Apr 01 00:35:07 host kernel: scsi host11: uas_eh_device_reset_handler success
  Apr 01 00:36:38 host kernel: scsi host11: uas_eh_device_reset_handler start
  Apr 01 00:36:38 host kernel: usb 6-3.4: reset SuperSpeed USB device number 12 using xhci_hcd
  Apr 01 00:36:38 host kernel: scsi host11: uas_eh_device_reset_handler success
  ```

  It appears to be only related to the drive being resilvered. I did not observe resilver errors
- Resilvering `iostat` shows numbers in line with the 500MB/s of the USB 3.1 Gen 1 port it's connected to:

  ```
     tps  kB_read/s  kB_wrtn/s  kB_dscd/s  kB_read  kB_wrtn  kB_dscd  Device
  314.60     119.9M      95.2k       0.0k   599.4M   476.0k     0.0k  sda
  264.00     119.2M      92.0k       0.0k   595.9M   460.0k     0.0k  sdb
  411.00     119.9M      96.0k       0.0k   599.7M   480.0k     0.0k  sdc
  459.40       0.0k     120.0M       0.0k     0.0k   600.0M     0.0k  sdd
  ```

- Running a second resilver on a chipset-provided USB 3.1 port while looking for USB resets like previously seen in the logs. The hypothesis is that there's instability with the CPU-provided USB 3.1 ports as there have been documented problems with those
  * I had the new drive disconnect upon KVM switch, where the KVM is connected to the same chipset-provided USB controller. Moved the KVM to the CPU-provided controller. This is getting fun
  * Got the same resets as the drive began the sequential write phase:

    ```
    Apr 02 16:13:47 host kernel: scsi host11: uas_eh_device_reset_handler start
    Apr 02 16:13:47 host kernel: usb 6-2.4: reset SuperSpeed USB device number 9 using xhci_hcd
    Apr 02 16:13:47 host kernel: scsi host11: uas_eh_device_reset_handler success
    ```

  * 🤦 It appears that I read the manual wrong. All the 3.1 Gen 1 ports on the back IO are CPU-provided. Moving to a chipset-provided port for real and retesting... The resilver entered its sequential write phase and there have been no resets so far. The peak speeds are a tad higher too:

    ```
       tps  kB_read/s  kB_wrtn/s  kB_dscd/s  kB_read  kB_wrtn  kB_dscd  Device
    281.80     130.7M      63.2k       0.0k   653.6M   316.0k     0.0k  sda
    273.00     130.1M      56.8k       0.0k   650.7M   284.0k     0.0k  sdb
    353.60     130.8M      63.2k       0.0k   654.0M   316.0k     0.0k  sdc
    546.00       0.0k     133.2M       0.0k     0.0k   665.8M     0.0k  sdd
    ```

  * Resilver finished. No resets or errors in the system logs
  * Did a second resilver. Finished without errors again
  * Resilver while connected to the chipset-provided USB port takes around 18 hours for the same disk that took over 30 hours via the CPU-provided port

## Verdict so far

~~The OWC passed all of the testing so far with flying colors.~~ Even though the resilver finished successfully, there were silent USB resets in the logs with the OWC connected to CPU-provided ports. Multiple ports exhibited the same behavior. When connected to a B350 chipset-provided port on the other hand, the OWC finished two resilvers with no resets and faster, 18 hours vs 32 hours. My hypothesis is that these silent resets are likely related to the known USB problems with Ryzen CPUs. The OWC itself passed testing with flying colors when connected to a chipset port. I'm buying another one for the new disks.

## Pics

### General

### PSU
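As a footnote to the Testing section: the silent resets are easy to miss, so here's a minimal sketch for counting them per USB device path in a kernel log. The regular expression is written against the exact message format quoted in the post. The function name is made up, and the sample is just the first two reset events from the excerpt above.

```python
import re
from collections import Counter

# Matches lines like:
#   usb 6-3.4: reset SuperSpeed USB device number 12 using xhci_hcd
RESET_RE = re.compile(r"usb (\S+): reset SuperSpeed USB device number \d+ using xhci_hcd")


def count_usb_resets(log_lines):
    """Count reset messages per USB device path (e.g. '6-3.4')."""
    counts = Counter()
    for line in log_lines:
        match = RESET_RE.search(line)
        if match:
            counts[match.group(1)] += 1
    return counts


SAMPLE_LOG = """\
Apr 01 00:31:08 host kernel: scsi host11: uas_eh_device_reset_handler start
Apr 01 00:31:08 host kernel: usb 6-3.4: reset SuperSpeed USB device number 12 using xhci_hcd
Apr 01 00:31:08 host kernel: scsi host11: uas_eh_device_reset_handler success
Apr 01 00:32:42 host kernel: scsi host11: uas_eh_device_reset_handler start
Apr 01 00:32:42 host kernel: usb 6-3.4: reset SuperSpeed USB device number 12 using xhci_hcd
Apr 01 00:32:42 host kernel: scsi host11: uas_eh_device_reset_handler success
""".splitlines()

for device, resets in count_usb_resets(SAMPLE_LOG).items():
    print(f"usb {device}: {resets} resets")
```

Feeding it the kernel log for the duration of a resilver (for example the output of `journalctl -k` saved to a file) should show at a glance whether a given port is resetting and how often.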

Swapped out the stock Gateron Brown switches for MX2A Ergo Clear. Added o-rings to shorten the key travel to 2.5-3mm. The result feels dramatically different. Individual key presses feel amazing. I'm not sure about typing yet.

It's a very interesting feel, unlike any other mechanical keyboard I've had. I've been typing on DSA for over half a decade now so I'm used to the flat row profile but the increased surface size still messes with my brain a bit. I like it. There's some similarity to the feel of a laptop keyboard. More info on [SP G20.](https://spkeyboards.com/pages/g20-keycap-sets)

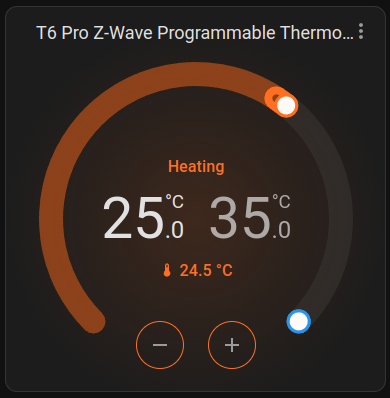

> Here we go. The setup was pretty trivial. The setup for the Zooz GPIO Z-Wave adapter for Yellow was trivial. Adding the T6 was trivial too. I had to install 2x Z-Wave smart plugs to extend the network from where the Yellow is to where the thermostat is. I used Leviton Z-Wave smart plugs. Finally I added the automation I wanted this whole thing for. Seems to work ™
>
> The only downside I can see so far is that the T6 doesn’t support multi-speed fan (G1/G2/G3 wiring) so I had to choose one of the speeds while wiring and I can’t use the rest. From what I can tell Ecobee seems to be able to use G1/2/3 but I’m not ready to give up the ethernet-independent operation that the T6 and Z-Wave allow just to have multiple fan speeds.
>
> Does anyone know if there’s a (non-retail) variant of the T6 that supports multi-speed fan?

I needed some thermostat automation done and I stumbled upon [this thread.](https://community.home-assistant.io/t/which-thermostat-is-most-home-assistant-friendly-ecobee/632022) I just attempted this and it went about as smoothly as I can imagine. If you're also in need of an offline solution, the Z-Wave version of the Honeywell T6 seems to do the job.

#homeassistant #zwave #thermostat #homeautomation

> Waste sitting in pits could fill almost 883,000 Olympic-size swimming pools, and oil companies say they need to find a way to reduce it

> The companies, including an affiliate of Exxon Mobil, are lobbying the Canadian government to set rules that would allow them to treat the waste and release it into the Athabasca River by 2025, so they have enough time to meet their commitments to eventually close the mines.

Of course they are.

arstechnica.com

> An early experiment suggests that an injection of klotho improves working memory.